Logistic Regression

With deep learning, we can compose a deep neural network to suit the input data and its features. The goal is to train the network on the data to make predictions, and those predictions are tied to the outcomes that you care about: for example, is this transaction fraudulent, or which object does the photo contain? There are different techniques for configuring a neural network, and all of them build a relational hierarchy between the inputs and outputs.

In this tutorial, we are going to configure the simplest neural network: a logistic regression model.

Regression is a process that shows the relationship between the independent variables (inputs) and the dependent variables (outputs). In logistic regression, the dependent variable is categorical rather than continuous, meaning that the model predicts one of a limited number of classes or categories, like a switch you flip on or off. For example, it can predict whether an image contains a cat or a dog, or it can classify input into ten buckets labeled with the integers 0 through 9.
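To make the ten-bucket case concrete, here is a minimal sketch in plain Python. It assumes the model has already produced one raw score per class, and it uses a softmax to turn those scores into probabilities, which is a common choice for multi-class logistic regression rather than something this tutorial prescribes. The names `softmax` and `predict_class` are hypothetical helpers introduced only for illustration.

```python
import math
from typing import List, Sequence


def softmax(scores: Sequence[float]) -> List[float]:
    """Convert raw class scores into probabilities that sum to 1."""
    # Subtract the maximum score first so math.exp never overflows.
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_class(scores: Sequence[float]) -> int:
    """Return the index (0-based bucket) with the highest probability."""
    probs = softmax(scores)
    return max(range(len(probs)), key=probs.__getitem__)


# Hypothetical scores for the ten buckets 0 through 9.
example_scores = [0.1, 0.1, 0.1, 2.0, 0.1, 0.1, 0.1, 0.1, 0.1, 0.1]
predicted_bucket = predict_class(example_scores)
```

The prediction is always one of a fixed set of categories, which is what separates this from a regression that outputs a continuous value.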

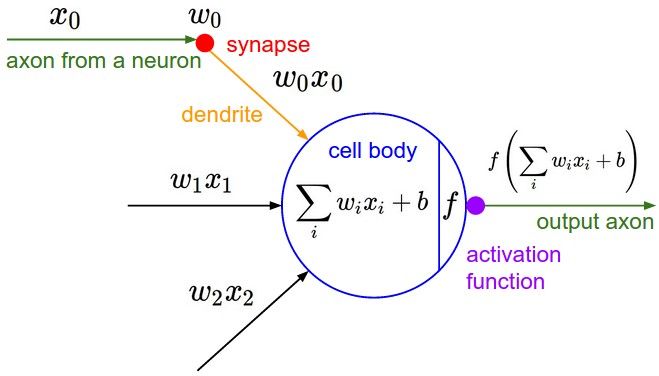

A simple logistic regression calculates x*w + b = y, where x is an instance of input data, w is the weight or coefficient that transforms that input, b is the bias, and y is the output, or prediction about the data. In practice, the result of x*w + b is passed through an activation function (a sigmoid for two classes, a softmax for more) so that the output can be read as a class probability. The biological terms in the figure show how this artificial neuron loosely maps to a neuron in the human brain. The most important point is how data flows through and is transformed by this structure.
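The computation can be sketched in a few lines of plain Python. This is an illustrative sketch, not the tutorial's own implementation: it assumes a two-class problem, applies a sigmoid to x*w + b to obtain a probability, and uses a 0.5 decision threshold. The function names and the example weights are made up for the demonstration.

```python
import math
from typing import Sequence


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    # Branch on the sign of z so math.exp is only called on non-positive values.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(x: Sequence[float], w: Sequence[float], b: float) -> float:
    """Compute sigmoid(x*w + b) for one input instance x."""
    z = sum(xi * wi for xi, wi in zip(x, w)) + b
    return sigmoid(z)


def predict(x: Sequence[float], w: Sequence[float], b: float,
            threshold: float = 0.5) -> int:
    """Return class 1 if the probability reaches the threshold, else class 0."""
    return 1 if predict_proba(x, w, b) >= threshold else 0


# Hypothetical input, weights, and bias: x*w + b = 2.0 - 1.0 + 0.5 = 1.5
label = predict([2.0, 1.0], [1.0, -1.0], 0.5)
```

Training a network amounts to adjusting w and b so that these probabilities match the labels in the data; the forward pass itself stays this simple.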

We’re going to configure the simplest network, with just one input layer and one output layer, to show how logistic regression works.

We will first build the layers and then feed them into the network configuration.

You may be wondering why we didn’t write any code for building our input layer. The input layer is only a set of input values fed into the network. It doesn’t perform a calculation. It’s just an input sequence (raw or pre-processed data) coming into the network, either to be trained on or to be evaluated. Later, we are going to work with data iterators, which feed input to a network in a specific pattern, and which can be thought of as the input layer of the network.
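As a rough illustration of the idea behind a data iterator, the sketch below yields fixed-size mini-batches of features and labels in order. It is a generic Python generator, not the iterator API of any particular library, and `batch_iterator` and its parameters are hypothetical names chosen for this example.

```python
from typing import Iterator, List, Sequence, Tuple


def batch_iterator(
    features: Sequence[Sequence[float]],
    labels: Sequence[int],
    batch_size: int,
) -> Iterator[Tuple[List[Sequence[float]], List[int]]]:
    """Yield (features, labels) mini-batches in their original order."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    if len(features) != len(labels):
        raise ValueError("features and labels must have the same length")
    for start in range(0, len(features), batch_size):
        end = start + batch_size
        # The final batch may be smaller if the data does not divide evenly.
        yield list(features[start:end]), list(labels[start:end])


# Five hypothetical examples split into batches of two.
batches = list(batch_iterator([[0.0], [1.0], [2.0], [3.0], [4.0]],
                              [0, 1, 0, 1, 0], batch_size=2))
```

The iterator performs no computation on the values themselves; like the input layer, it only controls how data arrives at the network.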